Research and Development

- Title: SpokHands

- Author: Greg Beller

- First version used in public on 8 June 2011

Aerial Percussions

In Babil-on and in Luna Park, the percussionist Richard Dubelsky literally speaks with his hand gestures, performing aerial percussion. His hands are equipped with two movement sensors, developed by Emmanuel Flety at Ircam, that allow him to trigger and modulate sound engines in real time.

The generated sounds result from concatenating pre-recorded, pre-segmented sounds, rendered on the fly with the MuBu for Max objects. Instrumental sounds, such as flute, are picked at random, whereas vocal sounds, such as syllables, are selected by the program according to certain criteria.

Real-time Speech Synthesis

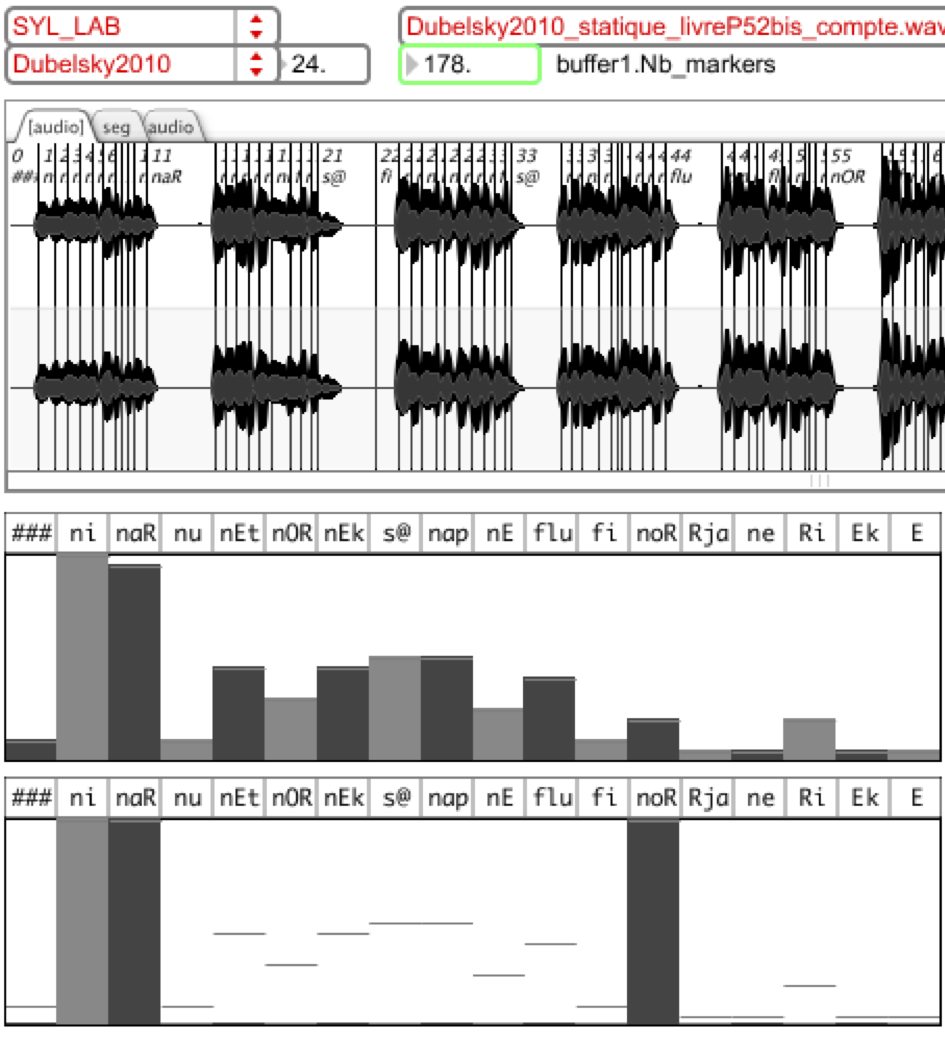

In this context, speech synthesis relies on text generated in real time and rendered with IRCAM’s TTS synthesis. In “Luna Park”, several technologies are combined to allow live text generation. The figure shows a recording segmented into syllables (top), a histogram of the frequency of appearance of each symbol (middle), and the same histogram after it has been guided for generation (bottom), for example by forcing the syllables “ni”, “naR” and “noR” to appear equally often.

This interface, provided in MuBu, makes it possible to alter (dislocate) the natural frequencies of the elements within an existing phrase. By varying these frequencies over time, one can obtain new structures and temporal flows, on speech or instrumental sounds, that result in coherent sonic and speech-like elements.
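The idea of guiding a histogram can be illustrated outside Max with a minimal Python sketch. It is not the MuBu implementation: the function names, the syllable list and the forced weights are all hypothetical, chosen only to show how overriding symbol frequencies changes what gets generated.

```python
import random
from collections import Counter

def natural_histogram(symbols):
    """Relative frequency of each symbol in a segmented recording."""
    counts = Counter(symbols)
    total = sum(counts.values())
    return {s: c / total for s, c in counts.items()}

def guide_histogram(hist, forced):
    """Override the weights of selected symbols, then renormalise to sum to 1."""
    guided = dict(hist)
    guided.update(forced)
    total = sum(guided.values())
    return {s: w / total for s, w in guided.items()}

def generate(hist, length, rng=None):
    """Draw `length` symbols at random, weighted by the histogram."""
    rng = rng or random.Random()
    symbols = list(hist)
    weights = [hist[s] for s in symbols]
    return rng.choices(symbols, weights=weights, k=length)

# Hypothetical syllable sequence standing in for a segmented recording.
recording = ["ni", "ni", "ni", "naR", "noR", "la", "la", "ni"]
natural = natural_histogram(recording)

# Force "ni", "naR" and "noR" to be equally likely and silence "la".
guided = guide_histogram(natural, {"ni": 1.0, "naR": 1.0, "noR": 1.0, "la": 0.0})
sequence = generate(guided, 12, random.Random(0))
```

Changing the forced weights between successive calls to `generate` is one simple way to picture how varying the frequencies over time reshapes the flow of the output.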

Gesture to Sound Mapping Examples

Mapping consists of associating gesture controllers with audio engines. Here are several examples used in Luna Park:

Aerial Prosody

Linking the hit energy of the percussionist's right glove directly to the triggering of the synthesis engine lets the musician's percussive movements control the speech directly. If the rotation of the left glove is linked in parallel to the transposition and intensity of the synthesis engine, the combination gives the impression that the musician controls speech prosody with hand movements.
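As a rough illustration of this mapping, the sketch below turns one frame of glove data into a synthesis event. Everything in it is an assumption made for the example: the normalised input ranges, the threshold, the one-octave transposition range and the names `SynthEvent` and `map_gesture` do not come from the actual Max patch.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class SynthEvent:
    transposition_cents: float  # pitch shift applied to the triggered segment
    gain: float                 # linear intensity in [0, 1]

def map_gesture(hit_energy: float, rotation: float,
                threshold: float = 0.5,
                max_cents: float = 1200.0) -> Optional[SynthEvent]:
    """Map one frame of glove data to a synthesis event.

    hit_energy: right-glove hit energy, assumed normalised to [0, 1].
    rotation:   left-glove rotation, assumed normalised to [-1, 1].
    Returns None when the hit is too weak to trigger a segment.
    """
    if hit_energy < threshold:
        return None
    rotation = max(-1.0, min(1.0, rotation))  # clamp out-of-range sensor values
    return SynthEvent(
        transposition_cents=rotation * max_cents,
        gain=(rotation + 1.0) / 2.0,
    )

# A strong hit with the left hand turned halfway up.
event = map_gesture(hit_energy=0.9, rotation=0.5)
```

Here the right hand only decides when a segment sounds, while the left hand shapes how it sounds, which is the division of roles described above.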

Speech Memory

The history of the percussionist's instantaneous hand energies is used to control the memory order of the concatenative synthesis engine. This order matters because it strengthens the dependencies, over time, between triggered speech segments, and thus makes the result more or less understandable as speech to the audience. The more energy the percussionist puts into the movement, the less memory is used, so the output can range from aleatory speech gestures (little memory) to more comprehensible text (more memory).
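One way to picture a memory order is a variable-order Markov chain over segments, sketched below in Python. This is an assumption made for illustration, not a description of the actual engine: the linear energy-to-order mapping, the function names and the toy corpus are all hypothetical.

```python
import random
from collections import defaultdict

def build_model(sequence, max_order):
    """Map each context (up to max_order previous segments) to its observed successors."""
    model = defaultdict(list)
    for order in range(max_order + 1):
        for i in range(order, len(sequence)):
            context = tuple(sequence[i - order:i])
            model[context].append(sequence[i])
    return model

def energy_to_order(energy, max_order):
    """More energy means less memory: energy 0 gives max_order, energy 1 gives 0."""
    energy = max(0.0, min(1.0, energy))
    return round((1.0 - energy) * max_order)

def next_segment(model, history, order, rng):
    """Pick a successor using the longest known context of at most `order` segments."""
    for k in range(min(order, len(history)), -1, -1):
        context = tuple(history[len(history) - k:])
        if context in model:
            return rng.choice(model[context])
    raise ValueError("the model is empty")

# Hypothetical segmented text; real segments would be recorded syllables.
corpus = ["bon", "jour", "lu", "na", "park"]
model = build_model(corpus, max_order=2)
rng = random.Random(0)

history = ["bon"]
for energy in (0.0, 0.0, 1.0):  # two calm frames, then an energetic one
    order = energy_to_order(energy, max_order=2)
    history.append(next_segment(model, history, order, rng))
```

With low energy the chain follows the original order of the corpus; with high energy the order drops to zero and each segment is drawn without regard to what came before.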

To Learn More…

The musical research undertaken for this project, on live vocal synthesis and its relation to movement capture, can lead to further artistic and scientific applications. Curious readers are referred to the following articles:

- [Beller11b] Beller, G., Aperghis, G., « Gestural Control of Real-Time Concatenative Synthesis in Luna Park », P3S, International Workshop on Performative Speech and Singing Synthesis, Vancouver, 2011, pp. 23-28

- [Beller11c] Beller, G., « Gestural Control Of Real Time Concatenative Synthesis », ICPhS, Hong Kong, 2011

- [Beller11d] Beller, G., « Gestural Control of Real-Time Speech Synthesis in Luna Park », SMC, Padova, 2011

- [Beller11a] Beller, G., Aperghis, G., « Contrôle gestuel de la synthèse concaténative en temps réel dans Luna Park : rapport compositeur en recherche 2010 », 2011

They describe in detail the movement sensors that were developed and employed, along with an analysis of the data they generate. They also describe the real-time audio engine, together with a novel prosodic transformation module that allows speech rate to be modified in real time. Finally, the articles propose examples of “mapping” between sensors and audio rendering used in “Luna Park”.

For an update about SpokHands, see the Synekine Project.